The Internet was the result of some visionary thinking by people in the early 1960s who saw great potential value in allowing computers to share information on research and development in scientific and military fields. J.C.R. Licklider of MIT first proposed a global network of computers in 1962, and moved over to the Advanced Research Projects Agency (ARPA, renamed DARPA in 1972) in late 1962 to head the work to develop it. Leonard Kleinrock of MIT and later UCLA developed the theory of packet switching, which was to form the basis of Internet connections. Lawrence Roberts of MIT connected a Massachusetts computer with a California computer in 1965 over dial-up telephone lines. The experiment showed that wide area networking was feasible, but also that the circuit switching used by telephone lines was inadequate for it, confirming Kleinrock's packet switching theory. Roberts moved over to ARPA in 1966 and developed his plan for ARPANET. These visionaries, and many more left unnamed here, are the real founders of the Internet.
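
In rough terms, packet switching breaks a message into small numbered blocks that travel independently over shared lines and are put back together at the destination, instead of holding a dedicated circuit open for the whole exchange. The minimal Python sketch below shows only that split-and-reassemble idea. The 8-character packet size and the function names `to_packets` and `reassemble` are hypothetical choices for illustration; real packets also carry addresses, headers, and error checks that are omitted here.

```python
import random

def to_packets(message, size=8):
    """Split a message into (sequence number, chunk) packets of at most `size` characters."""
    return [(seq, message[i:i + size])
            for seq, i in enumerate(range(0, len(message), size))]

def reassemble(packets):
    """Rebuild the message by sorting packets on their sequence numbers."""
    return "".join(chunk for _seq, chunk in sorted(packets))

message = "Packets may take different routes and arrive out of order."
packets = to_packets(message)

# Simulate packets travelling independently and arriving in a different order.
arrived = packets[:]
random.Random(1969).shuffle(arrived)

print(reassemble(arrived) == message)  # True
```

The sequence numbers are what make out-of-order arrival harmless: the receiver does not care which path each packet took.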

The Internet, then known as ARPANET, was brought online in 1969 under a contract let by the Advanced Research Projects Agency (ARPA), and initially connected four major computers at research sites in the western US (UCLA, Stanford Research Institute, UCSB, and the University of Utah). The contract was carried out by BBN of Cambridge, MA under Bob Kahn, and the four-site network was in operation by December 1969. By June 1970, MIT, Harvard, BBN, and Systems Development Corporation (SDC) in Santa Monica, California were added. By January 1971, Stanford, MIT's Lincoln Labs, Carnegie-Mellon, and Case Western Reserve University were added. In the months that followed, NASA/Ames, Mitre, Burroughs, RAND, and the University of Illinois plugged in. After that, there were far too many to keep listing here.

The Internet was designed in part to provide a communications network that would work even if some of the sites were destroyed by nuclear attack. If the most direct route was not available, routers would direct traffic around the network via alternate routes.
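
The rerouting idea can be illustrated with a toy model. The Python sketch below is a minimal illustration, not how ARPANET's routers actually worked: it runs a breadth-first search over a hypothetical five-site network (sites `A` to `E`, invented for the example) to find a fewest-hop path, skipping any sites marked as down. The function name `find_route` is likewise hypothetical.

```python
from collections import deque

# Hypothetical toy network: each site lists its direct neighbors (links run both ways).
LINKS = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D"],
    "D": ["B", "C", "E"],
    "E": ["D"],
}

def find_route(src, dst, down=frozenset()):
    """Return a fewest-hop path from src to dst that avoids sites in `down`.

    Returns None if every possible path passes through a failed site.
    """
    if src in down or dst in down:
        return None
    prev = {src: None}  # remembers which site each site was first reached from
    queue = deque([src])
    while queue:
        site = queue.popleft()
        if site == dst:
            # Walk back through `prev` to rebuild the path from src to dst.
            path = []
            while site is not None:
                path.append(site)
                site = prev[site]
            return path[::-1]
        for nxt in LINKS[site]:
            if nxt not in prev and nxt not in down:
                prev[nxt] = site
                queue.append(nxt)
    return None

print(find_route("A", "E"))              # ['A', 'B', 'D', 'E']
print(find_route("A", "E", down={"B"}))  # ['A', 'C', 'D', 'E']  (routed around B)
print(find_route("A", "E", down={"D"}))  # None  (no surviving path)
```

Breadth-first search is used here only because it is short and always finds a fewest-hop path; the real ARPANET switching nodes computed routes adaptively by exchanging routing information with their neighbors.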

The early Internet was used by computer experts, engineers, scientists, and librarians. There was nothing friendly about it. There were no home or office personal computers in those days, and anyone who used it, whether a computer professional, engineer, scientist, or librarian, had to learn to use a very complex system.

E-mail was adapted for ARPANET by Ray Tomlinson of BBN in 1971. He picked the @ symbol from the available symbols on his teletype to separate the user's name from the name of the host computer. The telnet protocol, enabling logging on to a remote computer, was published as a Request for Comments (RFC) in 1972. RFCs are a means of sharing developmental work throughout the community. The File Transfer Protocol (FTP), enabling file transfers between Internet sites, was published as an RFC in 1973, and from then on RFCs were available electronically to anyone who could use FTP.

The Internet matured in the 1970s as a result of the TCP/IP architecture first proposed by Bob Kahn, who had moved from BBN to ARPA, and further developed by Kahn, Vint Cerf at Stanford, and others throughout the decade. It was adopted by the Defense Department in 1980, replacing the earlier Network Control Protocol (NCP), and was universally adopted by 1983.

The Unix-to-Unix Copy Protocol (UUCP) was invented in 1978 at Bell Labs. Usenet was started in 1979 based on UUCP. Newsgroups, which are discussion groups focusing on a topic, followed, providing a means of exchanging information throughout the world. While Usenet is not considered part of the Internet, since it does not share the use of TCP/IP, it linked Unix systems around the world, and many Internet sites took advantage of the availability of newsgroups. It was a significant part of the community building that took place on the networks.

Similarly, BITNET (Because It's Time Network) connected IBM mainframes around the educational community and the world to provide mail services beginning in 1981. Gateways were developed to connect BITNET with the Internet, allowing the exchange of e-mail, particularly for e-mail discussion lists.

In 1986, the National Science Foundation funded NSFNet as a cross-country 56 Kbps backbone for the Internet. The NSF maintained its sponsorship for nearly a decade, setting rules that limited the backbone to non-commercial government and research uses.

As the commands for e-mail, FTP, and telnet were standardized, it became a lot easier for non-technical people to learn to use the nets. It was not easy by today's standards by any means, but it did open up use of the Internet to many more people, in universities in particular. Departments other than the library and the computer, physics, and engineering departments found ways to make good use of the nets, communicating with colleagues around the world and sharing files and resources.

In 1991, the first really friendly interface to the Internet was developed at the University of Minnesota. The University wanted to develop a simple menu system to access files and information on campus through its local network. A debate followed between mainframe adherents and those who believed in smaller systems with client-server architecture. The mainframe adherents "won" the debate initially, but since the client-server advocates said they could put up a prototype very quickly, they were given the go-ahead to do a demonstration system. The demonstration system was called a gopher after the University of Minnesota mascot, the Golden Gopher. The gopher proved to be very prolific, and within a few years there were over 10,000 gophers around the world. Using one requires no knowledge of Unix or computer architecture. In a gopher system, you type or click on a number to select the menu item you want.
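
Under the hood, a Gopher client connects to a server (conventionally on TCP port 70), sends a selector string followed by a carriage return and line feed, and receives a menu in which each line holds a one-character item type and display text, followed by tab-separated selector, host, and port fields; a line containing a single period ends the menu. The Python sketch below is a hedged illustration based on that layout as described in RFC 1436: it parses an invented menu and numbers its items the way a client let users pick by number. The server name `gopher.example.edu`, the menu contents, and the function names are all hypothetical, and no network connection is made.

```python
# Hypothetical menu text, laid out the way a Gopher server sends a menu:
# type char + display text, then TAB-separated selector, host, port; "." ends it.
SAMPLE_MENU = (
    "1Campus News\t/news\tgopher.example.edu\t70\r\n"
    "0About This Server\t/about.txt\tgopher.example.edu\t70\r\n"
    "1Library Catalog\t/library\tgopher.example.edu\t70\r\n"
    ".\r\n"
)

# Item type "0" is a text file and "1" is another menu (directory).
TYPE_NAMES = {"0": "text file", "1": "menu"}

def parse_menu(raw):
    """Split a raw menu into (type, display, selector, host, port) tuples."""
    items = []
    for line in raw.split("\r\n"):
        if line == "." or not line:
            break  # a lone "." marks the end of the menu
        item_type, rest = line[0], line[1:]
        display, selector, host, port = rest.split("\t")[:4]
        items.append((item_type, display, selector, host, int(port)))
    return items

def show_menu(items):
    """Print numbered items, the way a Gopher client let users choose by number."""
    for number, (item_type, display, *_rest) in enumerate(items, start=1):
        print(f"{number}. {display} [{TYPE_NAMES.get(item_type, item_type)}]")

def request_for(items, choice):
    """Return the host, port, and request line a client would send for a numbered choice."""
    _type, _display, selector, host, port = items[choice - 1]
    return host, port, selector + "\r\n"

menu = parse_menu(SAMPLE_MENU)
show_menu(menu)
print(request_for(menu, 2))
```

Picking item 2 yields a request for the selector `/about.txt` on the same hypothetical host, which is all the user ever had to do: choose a number.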

In 1989 another significant event took place in making the nets easier to use. Tim Berners-Lee and others at the European Laboratory for Particle Physics, more popularly known as CERN, proposed a new protocol for information distribution. This protocol, which became the World Wide Web in 1991, was based on hypertext: a system of embedding links in text that point to other text, which you have been using every time you selected a text link while reading these pages. Although started before gopher, it was slower to develop.

The development in 1993 of the graphical browser Mosaic by Marc Andreessen and his team at the National Center for Supercomputing Applications (NCSA) gave the protocol its big boost. Andreessen later became the brains behind Netscape, which produced the most successful graphical browser and server until Microsoft declared war and developed Internet Explorer.

Since the Internet was initially funded by the government, it was originally limited to research, education, and government uses. Commercial uses were prohibited unless they directly served the goals of research and education. This policy continued until the early 1990s, when independent commercial networks began to grow. It then became possible to route traffic across the country from one commercial site to another without passing through the government-funded NSFNet backbone.

Delphi was the first national commercial online service to offer Internet access to its subscribers. It opened up an e-mail connection in July 1992 and full Internet service in November 1992. All pretenses of limitations on commercial use disappeared in 1995, when the National Science Foundation ended its sponsorship of the Internet backbone at the end of April and all traffic came to rely on commercial networks. AOL, Prodigy, and CompuServe also began offering Internet access. Since commercial usage was so widespread by this time, and educational institutions had been paying their own way for some time, the loss of NSF funding had no appreciable effect on costs.

Today, NSF funding has moved beyond supporting the backbone and higher education institutions to building K-12 and local public library access on the one hand, and to research on massive high-volume connections on the other.

Microsoft's full-scale entry into the browser, server, and Internet Service Provider market completed the major shift to a commercially based Internet. The release of Windows 98 in June 1998, with the Microsoft browser tightly integrated into the desktop, showed Bill Gates' determination to capitalize on the enormous growth of the Internet. Microsoft's success over the past few years has brought court challenges to its dominance. We'll leave it up to you whether you think these battles should be played out in the courts or the marketplace.

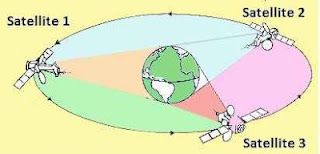

A current trend with major implications for the future is the growth of high-speed connections. 56K modems and the providers who support them are spreading widely, but this is just a small step compared to what will follow. 56K is not fast enough to carry multimedia, such as sound and video, except in low quality. But new technologies many times faster, such as cable modems, digital subscriber lines (DSL), and satellite broadcast, are available in limited locations now and will become widely available in the next few years. These technologies present problems, not just in the user's connection, but in maintaining a reliable high-speed data flow from the source to the user. Those problems are being worked on, too.
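
A rough calculation shows why 56K falls short for sound. Assuming uncompressed CD-quality stereo audio (44,100 samples per second, 16 bits per sample, two channels) and the modem's nominal rate of 56,000 bits per second, which real connections seldom reached:

$$
44{,}100\ \tfrac{\text{samples}}{\text{s}} \times 16\ \tfrac{\text{bits}}{\text{sample}} \times 2\ \text{channels} = 1{,}411{,}200\ \text{bit/s} \approx 1.41\ \text{Mbit/s}
$$

$$
\frac{1{,}411{,}200\ \text{bit/s}}{56{,}000\ \text{bit/s}} = 25.2
$$

Even a perfect 56K line would have to cut the data rate of CD-quality sound by a factor of about 25, which in practice meant heavy compression and reduced quality.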

During this period of enormous growth, businesses entering the Internet arena scrambled to find economic models that work. Free services supported by advertising shifted some of the direct costs away from the consumer, temporarily. Services such as Delphi offered free web pages, chat rooms, and message boards for community building. Online sales have grown rapidly for such products as books, music CDs, and computers, but profit margins are slim when price comparisons are so easy, and public trust in online security is still shaky. Business models that have worked well are portal sites, which try to provide everything for everybody, and live auctions. AOL's acquisition of Time Warner was the largest merger in history when it took place and shows the enormous growth of Internet business!

The stock market has had a rocky ride, swooping up and down as the new technology companies, the dot-coms, encountered good news and bad. The decline in advertising income spelled doom for many dot-coms, and the survivors are going through a major shakeout and a search for better business models.

It is becoming more and more clear that many free services will not survive. While many users still expect a free ride, fewer and fewer providers can find a way to provide it. The value of the Internet and the Web is undeniable, but there is a lot of shaking out to do, and costs and expectations must be managed, before it can regain its rapid growth.
